Faculty Perspectives on AI and Practical Advice

Do you have a policy on how students can or cannot use AI in your course? Why did you set this policy?

The main reasons that faculty cite when asked about their policy on AI use in their courses are to provide guidelines for students on the appropriate use of generative AI, prevent students’ misuse of the technology that would undermine their learning, and foster honesty and accountability. Some faculty ban it outright in specific assignments. For example, in a digital arts course (Diana Budde and Anna Moisiadis, UW-Milwaukee, Flexible Option), students are not allowed to use generative fills in Photoshop (a feature that uses AI to supply, edit, or remove parts of an image) because the learning goal of the assignment is for students to become familiar with and apply the features of the software.

Another instructor (Erin Bauer, UW-Green Bay) recently surveyed the students in her geopolitics class asking them whether they would consider using generative AI in assignments. The survey results showed that there is “definite hesitancy” on the part of the students to use generative AI to create content for assignments because they don’t know how to go about it. On the other hand, survey respondents also pointed out that they were “comfortable using AI” to check their grammar and spelling as well as outside of coursework. Based on these survey answers, Professor Bauer developed her AI policy using UW-Green Bay's version as her model. In addition, students in her course have the option to experiment with AI in a guided format to increase their confidence with the technology.

How does your policy reflect AI usage in your discipline or field?

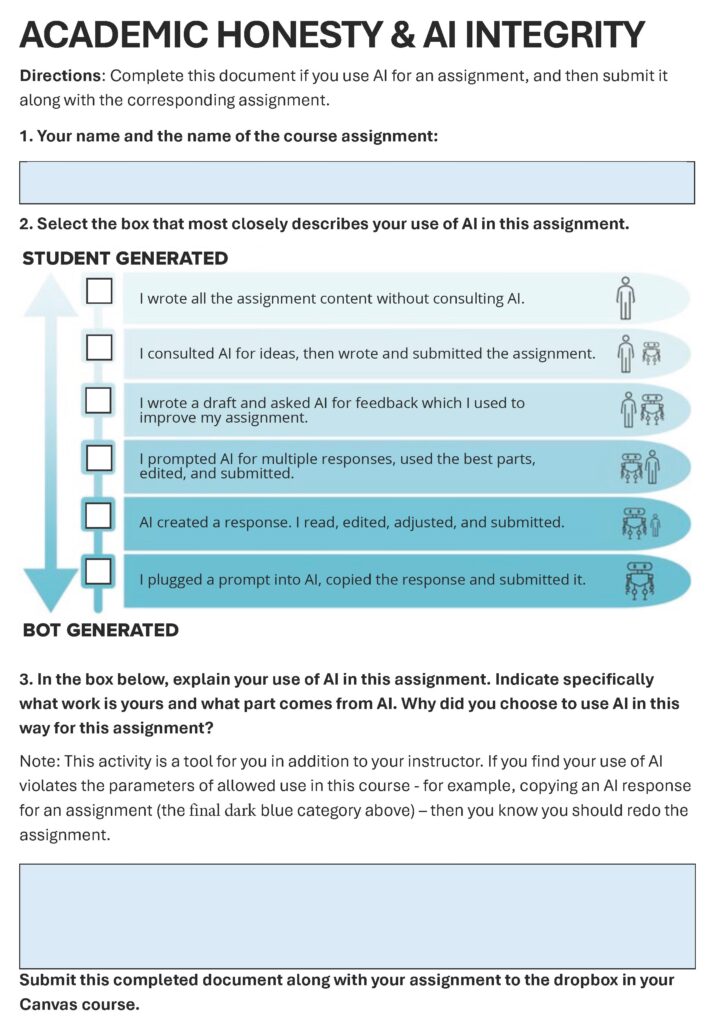

Especially in the humanities, it is sometimes felt that the use of generative AI prevents students from meaningful engagement with content. In history courses, for example, the goal is for students to engage with the past and the historical evidence in order to gain meaningful insights into the present and the human condition in general. Using generative AI in a field like history can prevent students from going through this very important process of reflection and critical analysis. An assignment written with the help of AI might superficially look like a finished product but in reality fail to show a student’s thinking process and how they arrived at their stated insights.

This is not to say that generative AI can’t be helpful in many situations or fields. For example, in sustainability, AI can allow students to identify and process far more data than they would be able to otherwise. Mark Starik, who teaches in the Sustainable Management program, says AI can be a helpful tool in areas like the circular economy, organizational and societal resilience, energy efficiency and renewable energy, social justice, and systemic change.

In the digital arts course mentioned above, students are asked to analyze a piece of human-made digital art, describe in detail how they think it was made, and then, based on their description, practice using AI to recreate the artwork while noting the limitations of AI. As with AI in history courses, the use of AI in art is criticized as a “thoughtless shortcut” in what is supposed to be a creative process of the human mind with the goal of creating a piece of art. AI is also seen as devaluing art and the work of artists by making available huge repositories of art that is not “insightful, empathic, or interesting” like human-made art.
Erin Bauer, the geopolitics instructor, points to some wider-ranging concerns about AI: its extensive use of energy, the impacts on the workforce and society’s mental health, the spread of misinformation, and threats to national security, all of which underscore the need for institutions and organizations to develop policies to ensure the safe use of the technology. Providing recommendations and guidance to today’s students on how and where to use it in their academic work will prepare them for their future careers. In today’s work environments, AI-enhanced applications are increasingly being used, oftentimes to increase efficiency and productivity and help with the generation of routine communication. When students enter the workforce, they will be expected to have familiarity with these new tools and be able to critically evaluate them.

A field like applied computing might be more likely to employ generative AI. Professor John Muraski (UW-Oshkosh) teaches a project management course in the Applied Computing program and is a strong advocate for using generative AI to build students’ skills for the workplace. In his policy, he states where students can use AI tools (brainstorming or idea generation) and where they can't (writing discussion responses, case study resolutions). Many individual assignments include specific instructions on where students should use generative AI. In addition, students are asked to reflect on and explain their use of AI in writing.

Our last example of AI usage might not be field-specific but is an excellent example of how you can empower your students to become responsible and selective users of generative AI. Todd Wilkinson (UW-River Falls), who teaches in the Health and Wellness Management program, provides a list of allowable and non-allowable uses of AI in his class, and for each use, gives a rationale as well as practical advice.
If students decide to use AI for any assignments in this class, they are asked to self-assess their use and explain why and how they used it. For the self-assessment, they use the checklist below to indicate the level of AI involvement in their work.

AI Self-Assessment for Students, created by Jennifer Jaworski, OPLR; based on a graphic by Christina DiMicelli, Pinkerton Academy